Hello. I'm Supriya from Fujitsu Research of India, and I'm Oura from the Artificial Intelligence Laboratory.

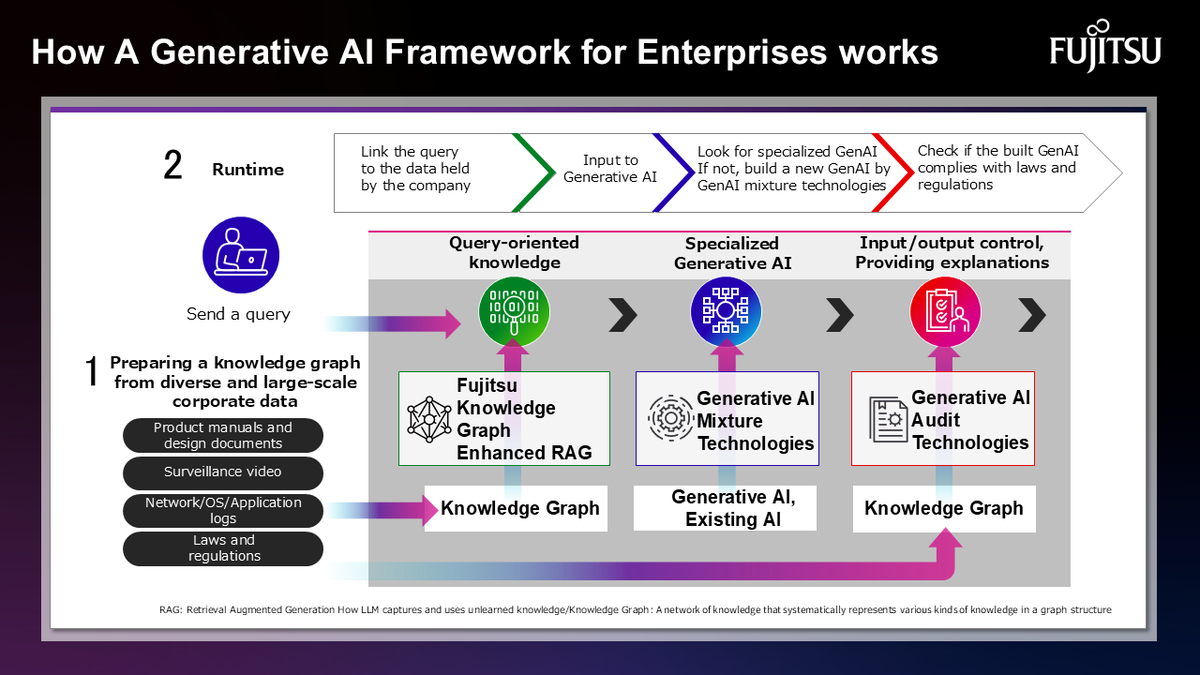

To promote the use of generative AI in enterprises, Fujitsu has developed a generative AI framework that can flexibly respond to diverse and changing corporate needs, handle the vast amount of data a company holds, and make it easier to comply with laws and regulations. The framework has been rolled out in stages since July 2024 as part of the AI service lineup of Fujitsu Kozuchi (R&D).

Some of the challenges that enterprise customers face when leveraging specialized generative AI models include:

- Difficulty handling large amounts of data required by the enterprise

- Generative AI cannot meet cost, response speed, and various other requirements

- Requirement to comply with corporate rules and regulations

To address these challenges, the framework consists of the following technologies:

- Fujitsu Knowledge Graph enhanced RAG ( *1 )

- Amalgamation Technology

- Generative AI Audit Technology

In this series, we introduce the "Fujitsu Knowledge Graph enhanced RAG". We hope this helps you to solve your problems. At the end of the article, we'll also tell you how to try out the technology.

Fujitsu Knowledge Graph Enhanced RAG Technology Overcomes the Weakness of Generative AI that Cannot Accurately Reference Large-Scale Data

Existing RAG techniques, which let generative AI refer to related material such as internal documents, struggle to reference large-scale data accurately. To solve this problem, we developed Fujitsu Knowledge Graph enhanced RAG (hereinafter, Fujitsu KG enhanced RAG). It extends existing RAG technology by automatically creating a knowledge graph that structures the huge amount of data a company owns, such as corporate regulations, laws, manuals, and videos. This expands the amount of data an LLM can refer to from hundreds of thousands or millions of tokens to more than 10 million tokens. Because knowledge based on the relationships in the knowledge graph can be fed accurately to the generative AI, the AI can reason logically and show the rationale for its output.

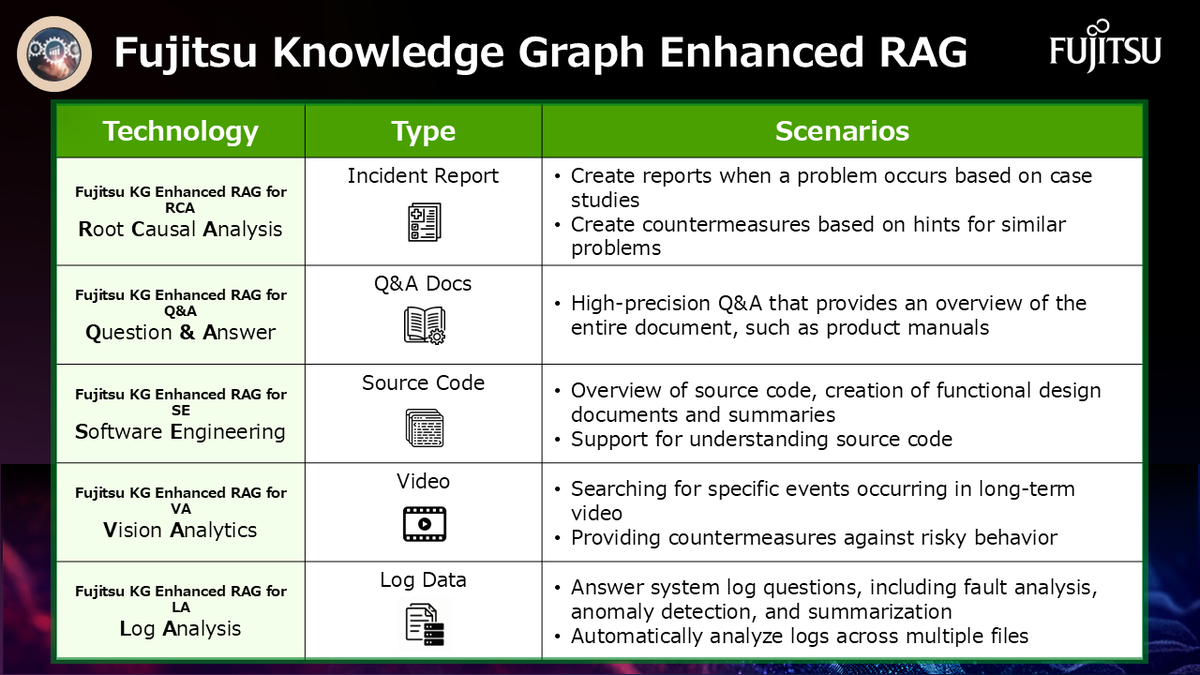

Fujitsu KG enhanced RAG comprises five technologies, chosen according to the target data and the application scenario.

1. Root Cause Analysis (Now Showing): This technology creates a report on the occurrence of a failure based on system logs and failure case data, and suggests countermeasures based on similar failure cases.

2. Question & Answer (Now Showing): This technology makes it possible to conduct advanced Q&A based on a comprehensive view of a large amount of document data such as product manuals.

3. Software Engineering (Now Showing): This technology not only understands source code, but also generates high-level functional design documents and summaries and enables modernization.

4. Vision Analytics (Now Showing): This technology can detect specific events and dangerous actions from video data, and even propose countermeasures.

5. Log Analysis (This article): This technology can answer various questions related to system logs in natural language, including fault cause analysis, anomaly detection, and summarization.

In this article, we introduce 5. Log Analysis (hereinafter, LA) in detail.

What is Fujitsu KG Enhanced RAG for LA?

The Stakes: When Every Second of Doubt Costs Thousands.

A single mis-configured cache can topple an e-commerce checkout pipeline in seconds. While dashboards dutifully report HTTP 500 spikes, they rarely tell operations teams why the failure erupted or where it started. Meanwhile, customers abandon carts, executive war-rooms convene, and the business bleeds an estimated $5,600 per minute of downtime (Gartner). Traditional log search tools index millions of lines but treat them as isolated text—stripping away the sequence and context that transform raw data into causality.

Fujitsu KG enhanced RAG for LA upends that paradigm. By converting every log line into a temporal hypergraph, the platform turns chaotic machine data into a living storyline that pinpoints root causes in seconds and elevates IT diagnostics from reactive search to proactive insight.

Logs Re-imagined as a Temporal Hypergraph.

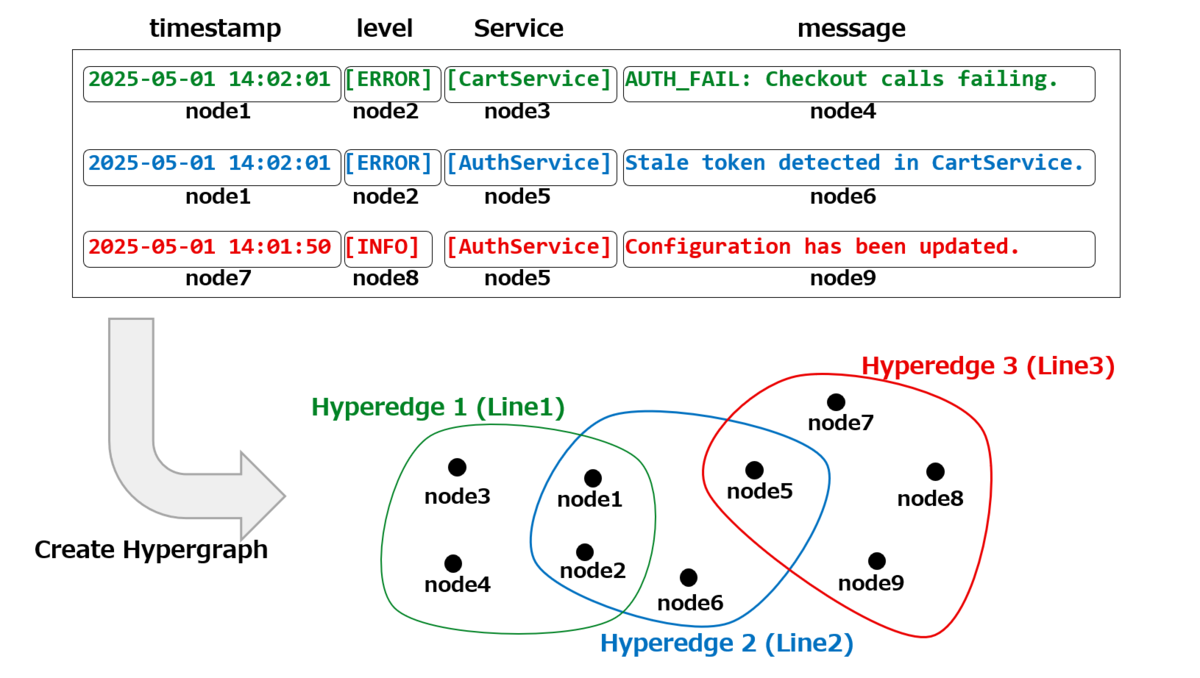

This section introduces the temporal hypergraph that serves as the knowledge graph in Fujitsu KG enhanced RAG for LA.

Nodes & Hyperedges. Every attribute–value pair—Timestamp=14:02:01, Level=ERROR, Service=Cart, Message=AUTH_FAIL...—becomes a node. Each log entry binds its nodes with a hyperedge, a structure capable of linking multiple entities at once. Because an attribute can recur across thousands of lines, nodes become shared junctions that naturally expose cross-service context.
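The node and hyperedge structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the product's implementation: the `Hyperedge` class, the `build_hypergraph` function, and all sample log entries except the `Cart` / `AUTH_FAIL` one are assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperedge:
    """One log entry: an id, its timestamp, and the nodes it binds (hypothetical type)."""
    edge_id: int
    timestamp: str
    nodes: frozenset  # set of (attribute, value) pairs


def build_hypergraph(entries):
    """Turn parsed log entries (dicts) into hyperedges plus a node index."""
    hyperedges = []
    node_index = defaultdict(list)  # node -> ids of the hyperedges that contain it
    for edge_id, entry in enumerate(entries):
        nodes = frozenset(entry.items())  # every attribute-value pair becomes a node
        hyperedges.append(Hyperedge(edge_id, entry["Timestamp"], nodes))
        for node in nodes:
            node_index[node].append(edge_id)
    return hyperedges, node_index


# Illustrative entries; only the Cart / AUTH_FAIL line mirrors the text above.
entries = [
    {"Timestamp": "14:01:29", "Level": "WARN", "Service": "Cache", "Message": "MEM_HIGH"},
    {"Timestamp": "14:02:01", "Level": "ERROR", "Service": "Cart", "Message": "AUTH_FAIL"},
    {"Timestamp": "14:02:03", "Level": "ERROR", "Service": "Checkout", "Message": "AUTH_FAIL"},
]
hyperedges, node_index = build_hypergraph(entries)

# A shared node is a junction between log entries from different services.
shared = node_index[("Message", "AUTH_FAIL")]
print(shared)
```

Here the `Message=AUTH_FAIL` node is shared by the Cart and Checkout entries, which is the kind of cross-service junction the paragraph describes.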

Time Native by Design. Unlike ordinary graphs, each hyperedge retains its timestamp. The graph therefore preserves chronology and context simultaneously; investigators see precisely how events unfold instead of piecing timelines together by hand.

Why Hypergraphs Matter. Real incidents are rarely pairwise. One database lock can throttle a queue, overtax a cache, and trip a circuit breaker simultaneously. Hyperedges capture that one-to-many cascade in a single unit, making domino chains visible at a glance. When failure modes fan out across micro-services, the temporal hypergraph lays bare the full ripple effect—no manual correlation required.
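To make the contrast with pairwise graphs concrete, the short sketch below represents the same incident both ways. The component names are hypothetical labels for the database lock example above.

```python
from itertools import combinations

# Hypothetical incident: one database lock affects three other components at once.
incident = {"db_lock", "queue_throttled", "cache_overloaded", "breaker_tripped"}

# Hypergraph view: a single hyperedge keeps all four entities together.
hyperedge = frozenset(incident)

# Ordinary-graph view: the same event has to be split into pairwise edges.
pairwise_edges = list(combinations(sorted(incident), 2))

print(len(hyperedge))       # number of entities bound by the one hyperedge
print(len(pairwise_edges))  # number of edges needed to express the grouping pairwise
```

Once the event is split into pairwise edges, nothing in the graph records that those edges came from one and the same event, which is the information a hyperedge preserves.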

From Chaos to Clarity: How the Engine Thinks.

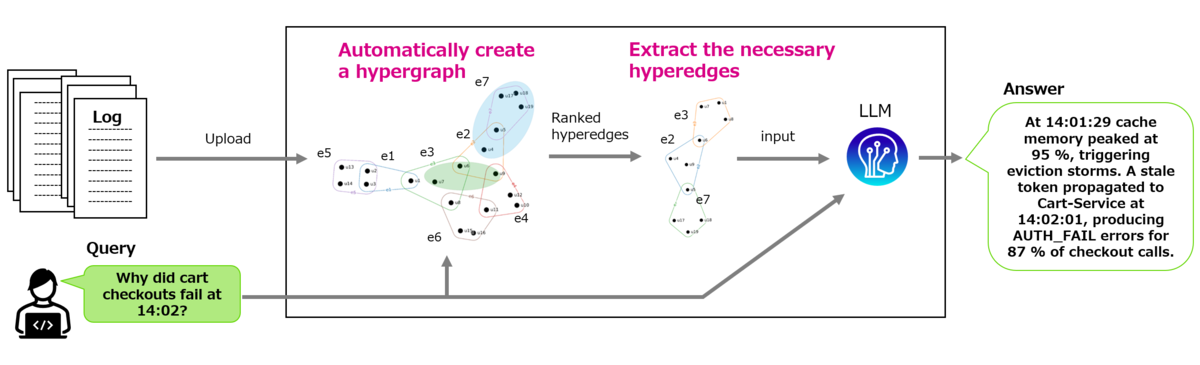

- Seed Discovery: Locating the Question’s DNA. Suppose a user asks, “Why did cart checkouts fail at 14:02?” The system embeds that query and every graph node into the same vector space, selects the most semantically related nodes, and plants them as seeds in the graph. This focuses computation on the log segments capable of producing an answer.

- Multi-Stage Hyperedge Ranking: Following the Energy. An enhanced Personalized PageRank diffuses outward from those seeds, assigning weight both to semantic proximity and to structural centrality. Hyperedges central to the unfolding incident quickly rise to the top, while irrelevant chatter recedes.

- Time-Preserving Unchunking: Feeding the LLM a Perfect Story. Only the top-ranked hyperedges (often a few dozen lines) are converted back to raw text in their original order and passed to a lightweight language model (an 8-billion-parameter Llama 3 is ample). Because the model ingests a tight, chronologically ordered narrative, it produces precise, auditable explanations with no hallucinated leaps.
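The three stages above can be sketched end to end in plain Python. This is a simplified illustration under stated assumptions, not the engine itself: token overlap stands in for a real embedding model, a standard Personalized PageRank on the node/hyperedge bipartite graph stands in for the enhanced variant, and the function names, sample log entries, and `Trace` attribute are all hypothetical.

```python
import re


def tokens(text):
    """Lowercased alphanumeric tokens: a toy stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_seeds(query, nodes, top_n=3):
    """Stage 1: keep the nodes most similar to the query (Jaccard overlap here)."""
    q = tokens(query)

    def score(node):
        t = tokens(" ".join(node))
        return len(q & t) / len(q | t)

    ranked = sorted(nodes, key=score, reverse=True)
    return [n for n in ranked[:top_n] if score(n) > 0]


def rank_hyperedges(entries, seeds, alpha=0.85, iters=50):
    """Stage 2: plain Personalized PageRank over nodes and hyperedges."""
    if not seeds:
        raise ValueError("at least one seed node is required")
    edge_nodes = [sorted(e.items()) for e in entries]
    node_edges = {}
    for i, members in enumerate(edge_nodes):
        for n in members:
            node_edges.setdefault(n, []).append(i)
    restart = {n: 1 / len(seeds) for n in seeds}
    node_score = dict(restart)
    edge_score = [0.0] * len(entries)
    for _ in range(iters):
        # Nodes spread their score evenly over the hyperedges that contain them.
        edge_score = [0.0] * len(entries)
        for n, s in node_score.items():
            for i in node_edges[n]:
                edge_score[i] += s / len(node_edges[n])
        # Hyperedges pass score back to their nodes; (1 - alpha) restarts at the seeds.
        new_score = {n: (1 - alpha) * restart.get(n, 0.0) for n in node_edges}
        for i, s in enumerate(edge_score):
            for n in edge_nodes[i]:
                new_score[n] += alpha * s / len(edge_nodes[i])
        node_score = new_score
    return edge_score


def unchunk(entries, edge_score, top_k=3):
    """Stage 3: keep the top-k hyperedges and restore the original log order."""
    top = sorted(range(len(entries)), key=lambda i: edge_score[i], reverse=True)[:top_k]
    return "\n".join(
        " ".join(f"{k}={v}" for k, v in entries[i].items()) for i in sorted(top)
    )


# Illustrative log entries, already in chronological order.
entries = [
    {"Timestamp": "14:01:29", "Level": "WARN", "Service": "Cache",
     "Message": "eviction storm", "Trace": "req-7"},
    {"Timestamp": "14:01:40", "Level": "INFO", "Service": "Search",
     "Message": "index refreshed"},
    {"Timestamp": "14:02:01", "Level": "ERROR", "Service": "Cart",
     "Message": "AUTH_FAIL on checkout", "Trace": "req-7"},
    {"Timestamp": "14:02:05", "Level": "ERROR", "Service": "Cart",
     "Message": "checkout aborted"},
]
query = "Why did cart checkouts fail at 14:02?"

nodes = sorted({n for e in entries for n in e.items()})
seeds = select_seeds(query, nodes)
scores = rank_hyperedges(entries, seeds)
context = unchunk(entries, scores, top_k=3)
print(context)  # text that would be handed to the language model
```

In this toy run the cache entry shares no words with the question, yet it reaches the context because it shares the `Trace=req-7` node with a Cart error, while the unrelated Search entry receives no score. That is the role diffusion over the graph plays alongside semantic matching.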

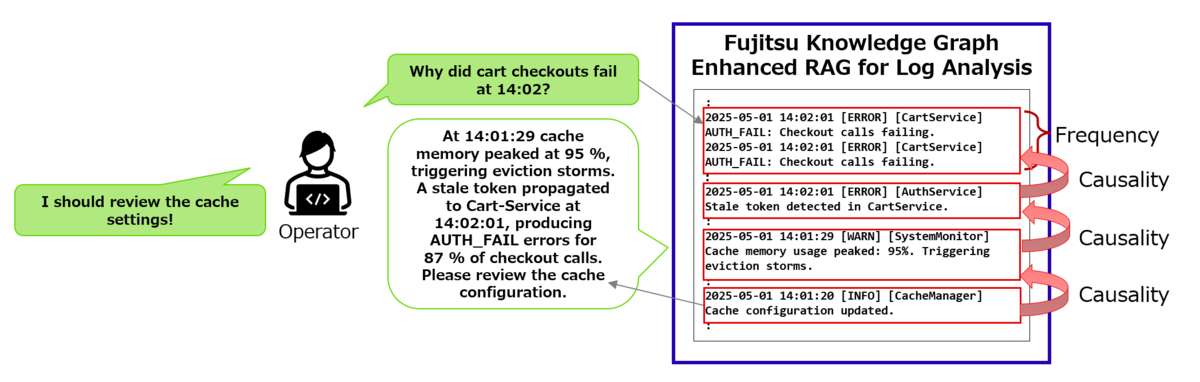

Operators receive an answer like: “At 14:01:29 cache memory peaked at 95 %, triggering eviction storms. A stale token propagated to Cart-Service at 14:02:01, producing AUTH_FAIL errors for 87 % of checkout calls.” Every sentence maps to specific log lines in the hypergraph, giving teams immediate clarity and deep traceability.

Traditional Log Search Tools vs. Fujitsu KG Enhanced RAG for LA: Two Philosophies, One Winner.

Here, we will compare analyzing logs using traditional log search tools with analyzing logs using our technology.

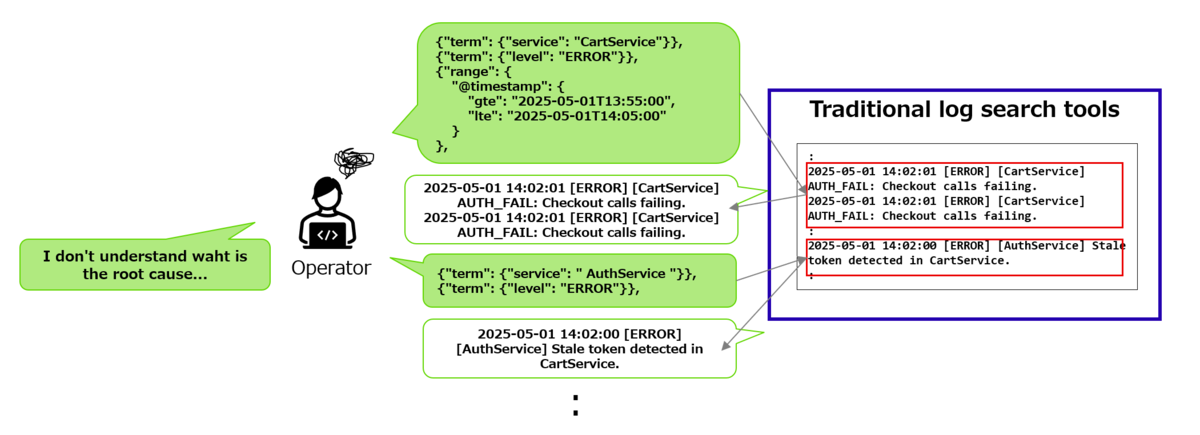

Generally, when an incident occurs, it is rare for the event and its cause to be directly linked one-to-one. With traditional log search tools, operators need to think of search queries and filter conditions as needed and repeatedly execute searches. Then, operators must check the search results and rely on information like timestamps to infer causal relationships. This process takes a lot of time, and ultimately, it may lead to a situation where the root cause remains unknown.

With our technology, operators enter questions in natural language. The system then extracts the logs related to the question, automatically analyzes the multi-layered causal relationships, and provides answers.

The table below compares log analysis with Elasticsearch, one of the traditional log search tools, against log analysis with our technology. Search engines remain excellent for ad-hoc look-ups. But when executives demand to know why something failed, not just what failed, only the temporal hypergraph architecture answers with conviction.

| Comparison items | Elasticsearch Approach | Fujitsu KG enhanced RAG for LA |
|---|---|---|
| Temporal integrity | Parallel ingestion often scrambles order; analysts must re-thread timelines. | Time is inherent: hyperedges come timestamped and ordered. |
| Multi-signal cascades | Pairwise correlations; misses one-to-many explosions. | Hyperedges bind all affected metrics, exposing domino chains instantly. |
| Noise & alert fatigue | Thresholds trigger on jitter; many false positives. | Seeded PageRank ignores background chatter; surfaces only pivotal edges. |
| Analyst workload | Requires manual queries, filters, dashboards. | Outputs root-cause hypotheses; no hunting required. |
| Long-horizon ripple tracing | 24-hour forecast limit for anomaly jobs. | Horizon-agnostic; can link month-old drifts to today’s outage. |

What are the Benefits of using Fujitsu KG Enhanced RAG for LA?

Tangible Wins: What Early Deployments Report.

By applying this technology, we expect to achieve the following effects.

- 75 % faster Mean-Time-To-Detect – Brownouts spotted and fixed before customers complain.

- 3× faster Post-Mortems – RCA summaries auto-generate in under an hour, freeing engineers for innovation work.

- $8 M in protected revenue – A fintech client reduced weekend outages by 40 %, safeguarding peak-transaction windows.

- 50 % drop in paging noise – Operations staff report clearer signals and a calmer on-call rotation.

Speaking the Language of Revenue: Why Sales Teams Close Faster.

- Board-Ready Narratives. Outputs read like executive briefs—linking technical failures to customer impact and dollars lost or saved.

- Rapid Proof-of-Value. API-level integration means prospects can replay their own incidents in a sandbox within weeks. Seeing their logs solved faster than with incumbent tools converts curiosity into budget.

- SLA Weaponry. Confidence-ranked root causes can trigger auto-remediation flows: restart a pod, roll back a deployment, or scale a tier—letting vendors promise “self-healing” instead of mere monitoring.

- Differentiation. When every competitor claims “AI-driven observability,” the one offering temporal-hypergraph RCA with auditable explanations wins the bake-off.

The Road to Autonomous Reliability

Root causes mapped to code commits, config drifts, or infrastructure tweaks become part of a living knowledge base. Over time, reinforcement policies learn patterns such as: “If Monday cache usage exceeds 85 % for 10 min, auto-scale cache cluster by 20 %.”
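As a rough illustration of what such a pattern amounts to once written down, the sketch below hard-codes the quoted rule. It is a hand-written check, not a learned reinforcement policy. The `should_scale` function, the one-minute sampling interval, and the cluster size of 10 nodes are assumptions made for the example.

```python
from datetime import datetime, timedelta


def should_scale(samples, threshold=85.0, minutes=10):
    """Return True if usage stayed above `threshold` for `minutes` consecutive
    one-minute samples taken on a Monday."""
    streak = 0
    for ts, usage in samples:
        if ts.weekday() == 0 and usage > threshold:  # weekday() == 0 means Monday
            streak += 1
            if streak >= minutes:
                return True
        else:
            streak = 0
    return False


start = datetime(2025, 1, 6, 9, 0)  # 6 January 2025 is a Monday
samples = [(start + timedelta(minutes=i), 90.0) for i in range(10)]

current_nodes = 10  # hypothetical cache cluster size
new_nodes = round(current_nodes * 1.2) if should_scale(samples) else current_nodes
print(new_nodes)
```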

The platform graduates from post-mortem detective to pre-mortem oracle, nudging systems before they wobble. That transformation—from reactive firefighting to predictive self-healing—marks the new frontier of digital resilience.

How to Try Out Fujitsu KG Enhanced RAG for LA

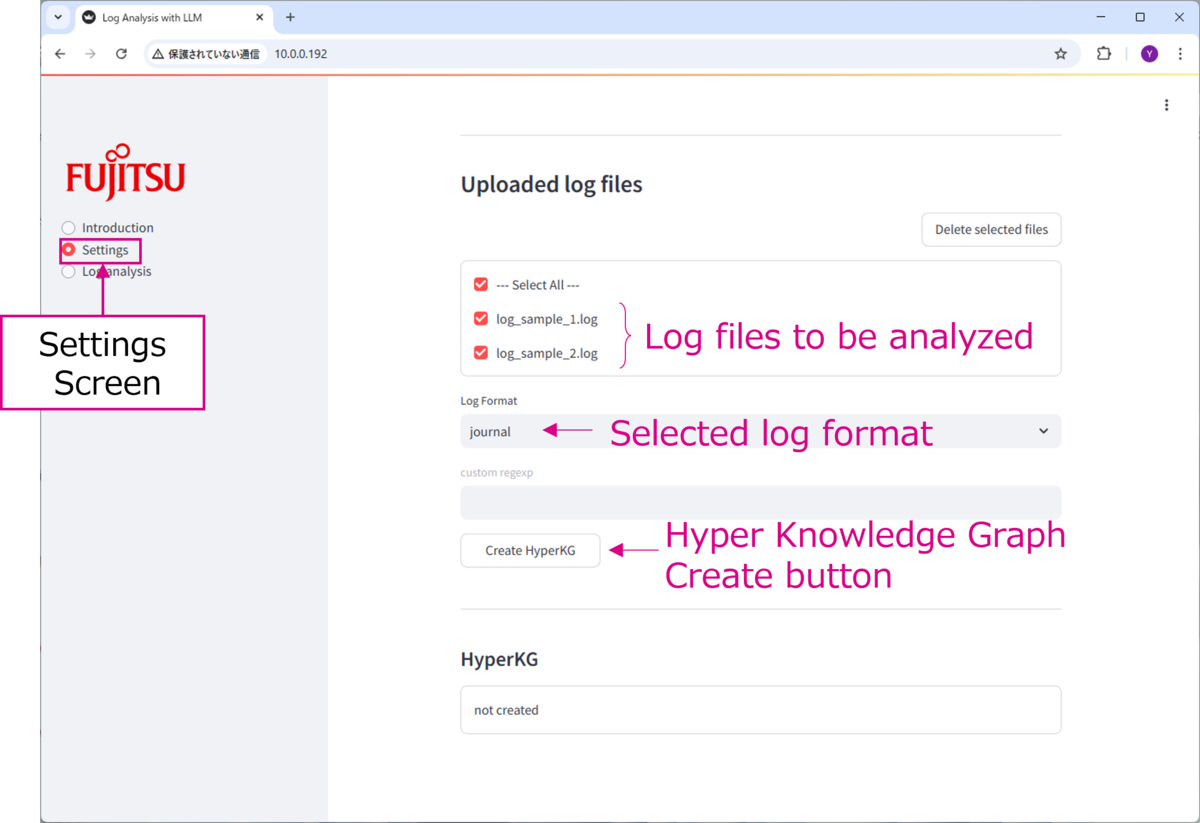

This technology is positioned as one of the "AI Core Engines" in Fujitsu Kozuchi, a platform that allows users to quickly try out advanced AI technologies researched and developed by Fujitsu. It has been released as a web application under the name "Fujitsu Knowledge Graph enhanced RAG for Log Analysis".

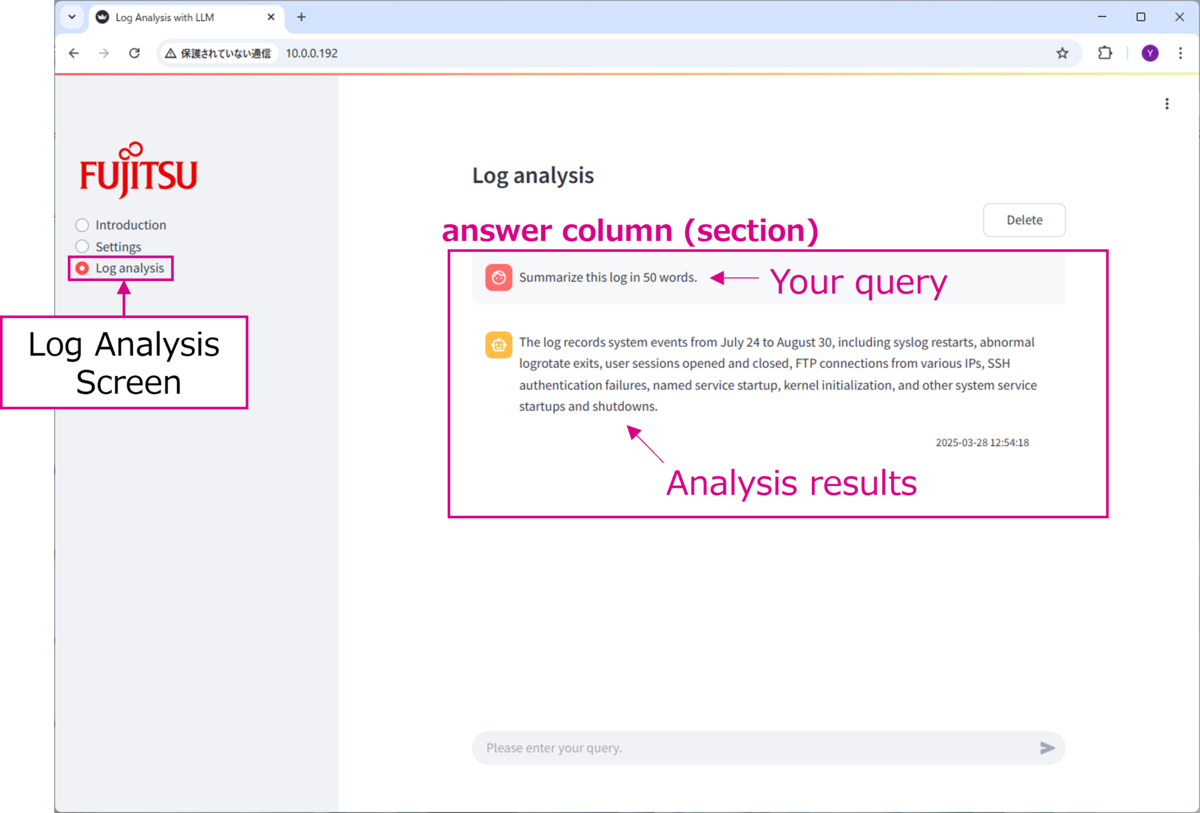

The figure shows an example screen of this web application. Upload the log files and press the "Create HyperKG" button. After that, you can get detailed answers simply by entering questions about the logs in the chat screen. If you are interested, feel free to give it a try.

Conclusion: Turn Log Chaos into Competitive Advantage.

Data volumes will keep exploding, micro-services multiplying, and customer tolerance for downtime shrinking. Tools that merely search text will drown in that deluge. Fujitsu KG enhanced RAG for LA converts logs into living narratives, serves root causes on a platter, and primes enterprises for autonomous operations.

Related documents

The technology in this article is based on the following paper.

- HG-InsightLog: Context Prioritization and Reduction for Question Answering with Non-Natural Language Construct Log Data (Bajpai et al., Findings 2025)

Acknowledgement

The technology and demo app in this article were developed by the following members. We would like to take this opportunity to introduce them.

- Fujitsu Research of India: Supriya Bajpai, Chandrakant Harjpal, Niraj S.Kumar, Athira Gopal.

- Fujitsu Research - Artificial Intelligence Laboratory: Junki Oura, Yoshihiro Okawa, Tatsuya Kikuzuki, Masatoshi Ogawa.

*1: RAG (Retrieval-Augmented Generation). A technology that combines generative AI with external data sources to extend its capabilities.