Hello. We are Sonoda and Yoshii from the Data & Security Research Lab. We are excited to introduce one of our latest research projects!

With the prevalence of generative AI, creating high-fidelity digital fakes has become easier than ever. The rapid proliferation of such harmful content on the internet has brought serious societal risks, including financial fraud, election manipulation, and threats to national security, among many others.

To address these risks, Fujitsu Laboratories is developing a variety of technologies with the overarching goal of enabling trustworthy AI solutions [1]. The Fujitsu Technology update 2025 [2] provides an overview of these technologies. In this article, we introduce our technology for debiased and trustworthy deepfake detection.

Let us first walk you through a simple demo of the technology. The video opens with a video conference meeting.

The person in the meeting is masquerading as Takahito Tokita, Fujitsu CEO [3], by swapping the face using AI. The video shows the detection result, which marks the fake face with a red rectangle. Within the rectangle, the regions that were instrumental in detecting the fake content are highlighted. In addition to human faces, documents such as receipts and invoices can also be analyzed, with detection results and corresponding explanations provided in the same way.

Our Novel Deepfake Detection Technology for Videos

Existing deepfake detection technologies have two notable shortcomings: (1) detection performance is often biased against certain demographic groups (e.g., by race or gender), and (2) the rationale behind a detection result is largely opaque. To overcome these issues, we propose a novel deepfake detection technology that considers both fairness and interpretability. To facilitate fairness, we propose a novel demographic-attribute-aware data augmentation mechanism. To enable interpretability, we introduce a concept extraction module that learns human-interpretable features instrumental in deepfake detection.

Enabling Interpretability while Preserving Fairness

The proposed concept extraction module aims to improve detection reliability while also making decisions interpretable for non-expert users. We enable this by visualizing deepfake detection results in terms of human-interpretable concepts such as facial accessories, skin color, and hairstyle. Furthermore, when detecting fake regions, the module takes into account spatio-temporal inconsistencies, which are crucial for detecting deepfakes across video frames.

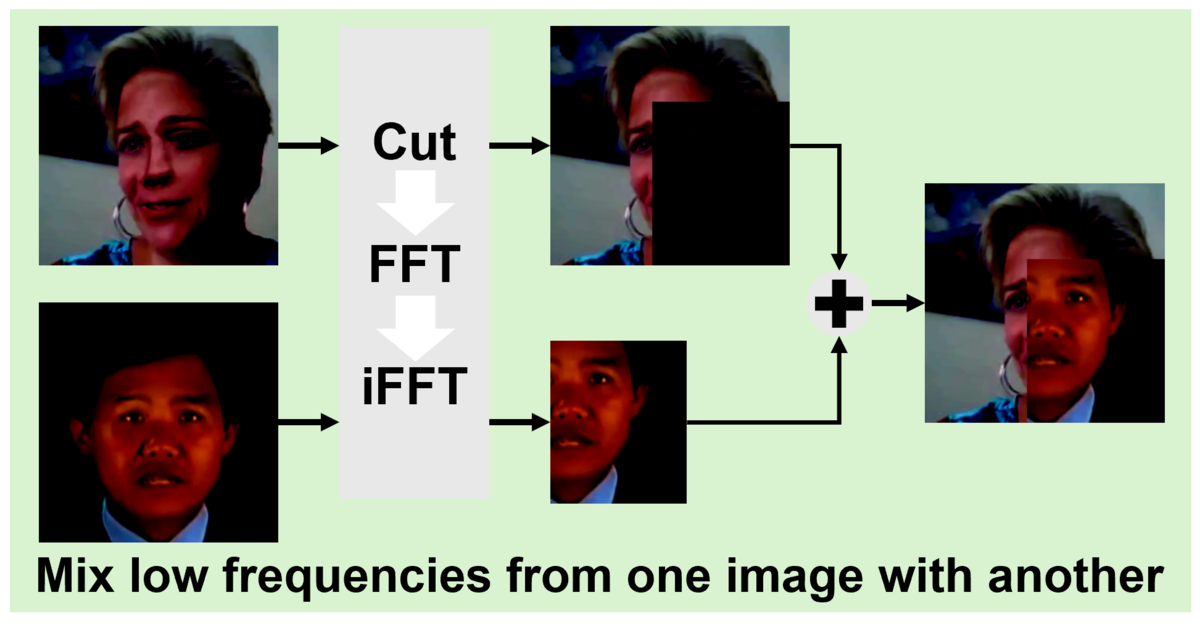

The proposed data augmentation method aims to improve the fairness of a deepfake detection model by reducing the demographic-attribute biases contained in a training dataset. Existing data augmentation methods such as MixUp and CutMix combine different examples to increase the diversity of the training dataset. However, they can erase the deepfake traces that exist in the high-frequency components of an image. Our method instead performs data augmentation that takes the frequency components of an image into account. It preserves the high-frequency components, which contain many deepfake traces, and combines only the low-frequency components of an example with those of another example. Furthermore, examples with different demographic attributes are preferentially paired for the augmentation.
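The frequency-aware mixing described above can be illustrated with a minimal sketch. This is not our actual implementation; it only shows one plausible way to blend low-frequency content while leaving high-frequency content untouched. The function names, the hard circular frequency mask, the `radius` and `lam` parameters, and the restriction to single-channel images are all assumptions made for illustration.

```python
import numpy as np


def low_freq_mask(height, width, radius):
    """Boolean mask that is True inside a centered disc of the shifted spectrum.

    The disc shape and `radius` are illustrative assumptions.
    """
    ys, xs = np.ogrid[:height, :width]
    cy, cx = height // 2, width // 2
    return (ys - cy) ** 2 + (xs - cx) ** 2 <= radius ** 2


def mix_low_frequencies(image, partner, radius=4, lam=0.5):
    """Blend the low-frequency content of `partner` into `image`.

    Frequencies outside the disc are copied from `image` unchanged, so
    high-frequency content (where deepfake traces are assumed to live)
    is preserved. Both inputs are 2-D grayscale arrays of equal shape.
    """
    if image.shape != partner.shape or image.ndim != 2:
        raise ValueError("expected two 2-D arrays of the same shape")
    # Move the zero-frequency term to the center of the spectrum.
    spec_a = np.fft.fftshift(np.fft.fft2(image))
    spec_b = np.fft.fftshift(np.fft.fft2(partner))
    mask = low_freq_mask(image.shape[0], image.shape[1], radius)
    mixed = spec_a.copy()
    # MixUp-style convex combination, applied to low frequencies only.
    mixed[mask] = lam * spec_a[mask] + (1.0 - lam) * spec_b[mask]
    # The imaginary part is only floating-point residue for real inputs.
    return np.real(np.fft.ifft2(np.fft.ifftshift(mixed)))


def pick_partner(index, attributes, rng):
    """Pick a random example whose demographic label differs from `index`.

    `attributes` is a hypothetical 1-D array of per-example group labels.
    """
    candidates = np.flatnonzero(attributes != attributes[index])
    return int(rng.choice(candidates))
```

In a training loop, one would call `pick_partner` to select an example from a different demographic group and then pass both images to `mix_low_frequencies`. Note that `pick_partner` raises an error if every example shares the same label, and that how the labels of mixed examples are handled is left open here.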

Our overall framework achieves both accuracy and fairness by effectively reducing biases while preserving deepfake traces.

Conclusion

While generative AI has enabled many benefits, its malicious use has also contributed to problems such as deepfakes. We are developing a suite of countermeasures against deepfakes. In this article, we introduced our technology concerning deepfake detection in videos, providing an overview of its key features, namely, fairness and interpretability.